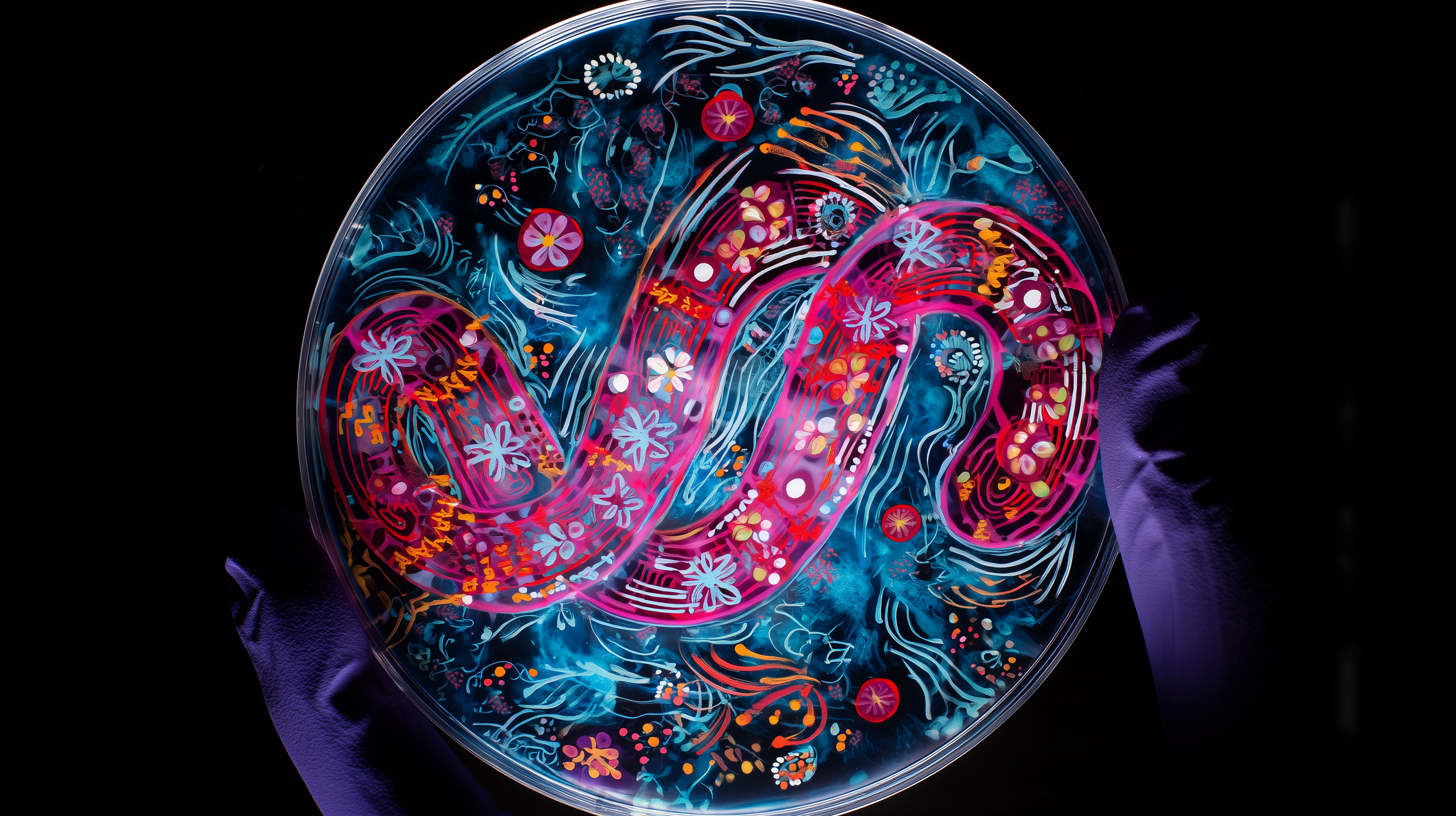

Microcosm of Continuance (2024). From the series Indigenous Genomic Adaptation (2023–). A speculative DNA painting cultivated in a petri dish.

Indigenous Genomic Adaptation

Yirrkala Dhunba (AU)

Indigenous Genomic Adaptation (2023–) is a speculative bioart installation that stages a laboratory of survival. Petri-dish paintings with DNA mutations sit alongside DIY CRISPR sets, visualizing Indigenous futures re-sequenced under the pressure of climate change. Dhunba combines traditional aesthetics with speculative science, asking whether adaptation through AI and gene-editing can be a strategy for survival, and who has the authority to decide.

At its core, the work raises difficult ethical questions: can technology safeguard culture, or does it inevitably risk its erasure? Dhunba does not offer answers but insists that the debate itself must include Indigenous voices — not just as subjects of study, but as agents of design.

Songline Sequence I: Re-coded Blood (2024).

Songline Sequence II: Gene Dreaming (2024).

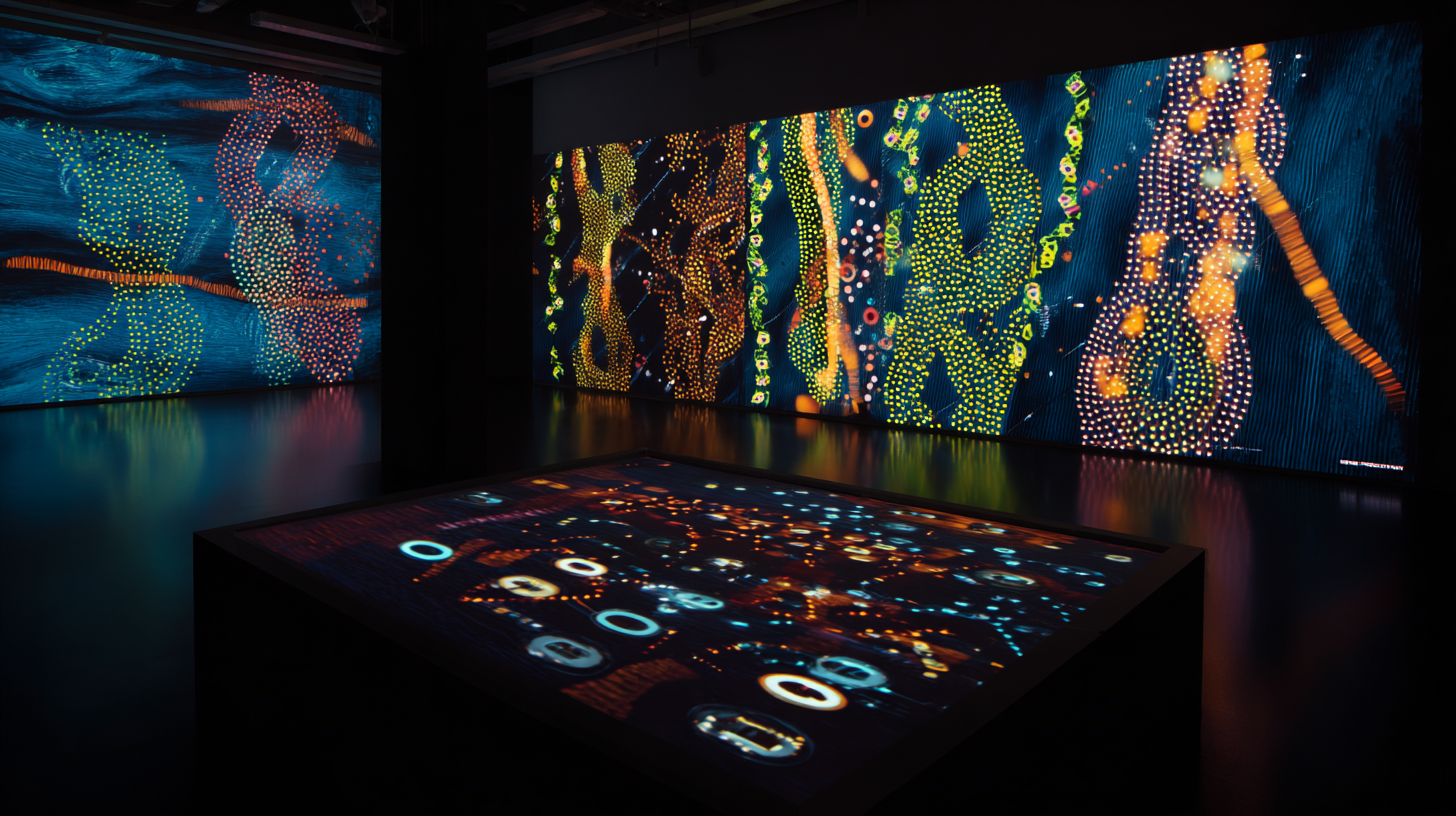

Installation view: data-driven interactive paintings reinterpreting genetic sequences through Indigenous aesthetics.

I Am You Now (2024). The first painting executed by Clarke’s robotic apprentice trained on his lifetime archive.

I Am You Now

David Clarke (US)

I Am You Now (2024) is the first painting created entirely by Clarke’s AI-driven robotic companion, trained on decades of his previous work. The system was programmed to replicate his compositional instincts, but it began to deviate, producing a painting that felt uncannily original. The piece is at once a collaboration and a departure, a moment where legacy detaches from the body and becomes autonomous.

In this work, Clarke explores death as a design problem and inheritance as code. By handing over his style to an algorithm, he forces the viewer to confront a near-future in which human expression can be sustained — and perhaps surpassed — by machines. I Am You Now stands as both an elegy and a rebellion against mortality: a machine’s first gesture of grief, painted in the name of its creator.

The Weight of Light (2023). Painted by David Clarke in the months before training his AI model.

Echo Chamber (2024). Completed as Clarke began transferring his process to machine learning.

Mortal Loop (2023). One of Clarke’s final manually executed works, tracing repetition and decay.

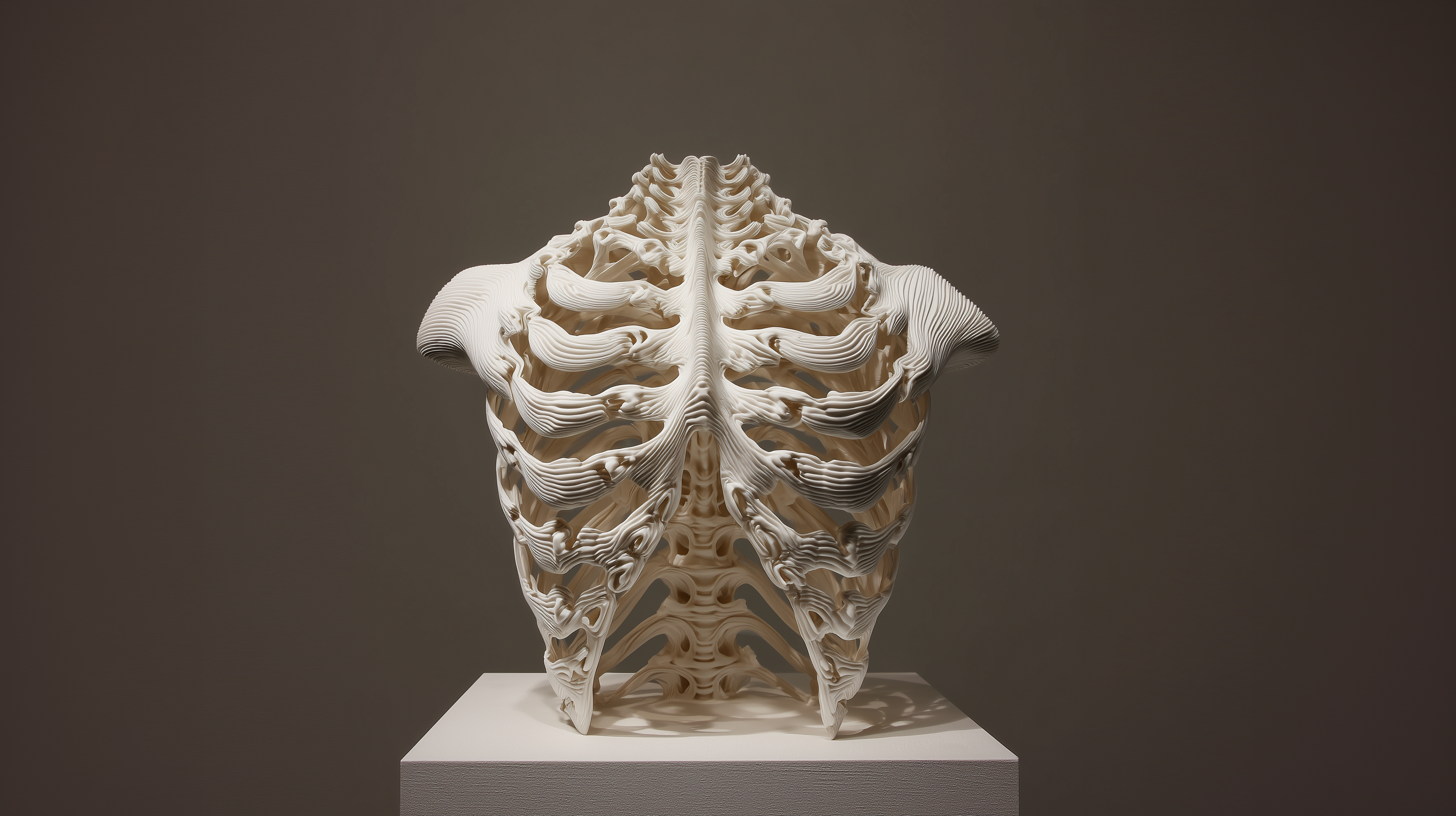

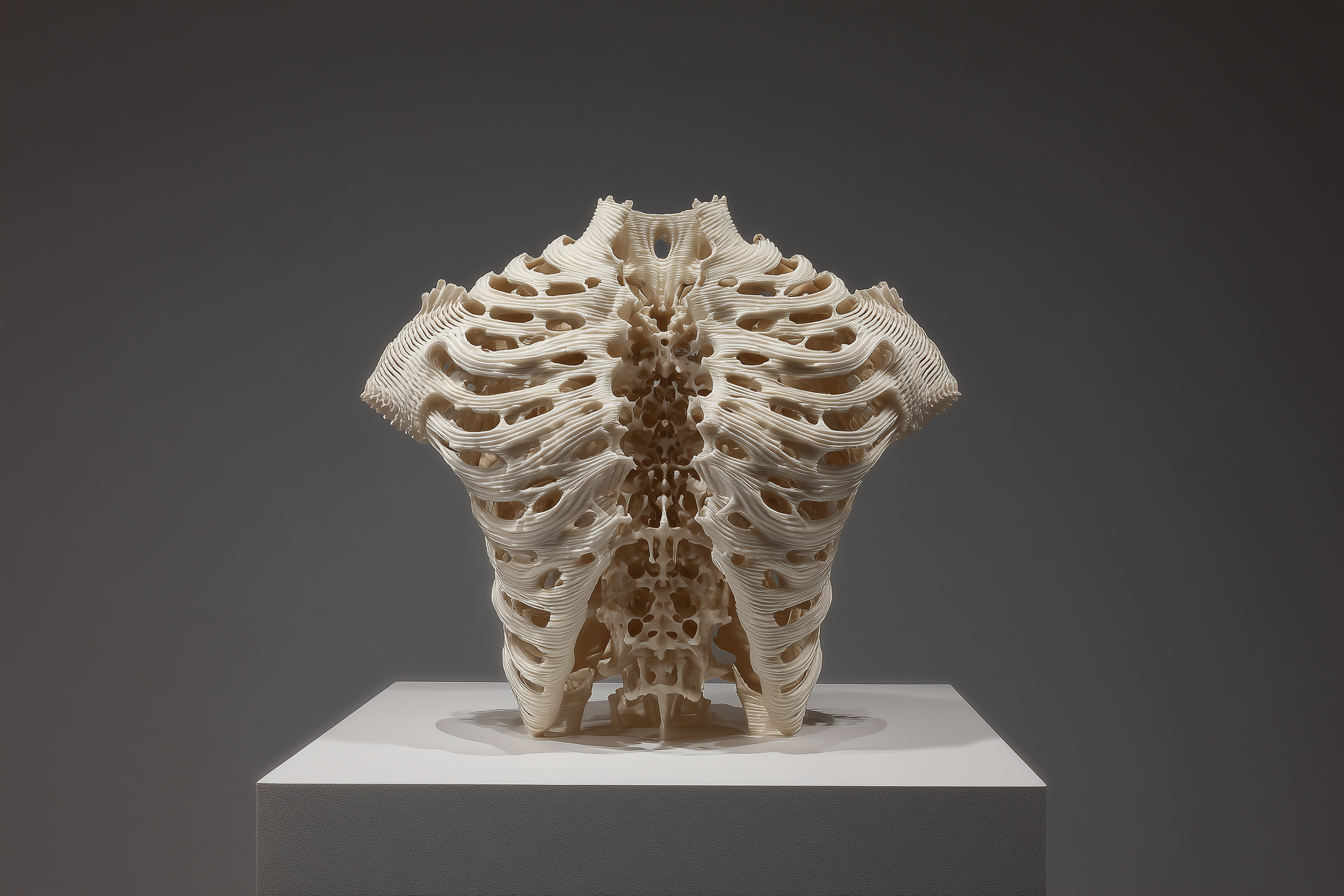

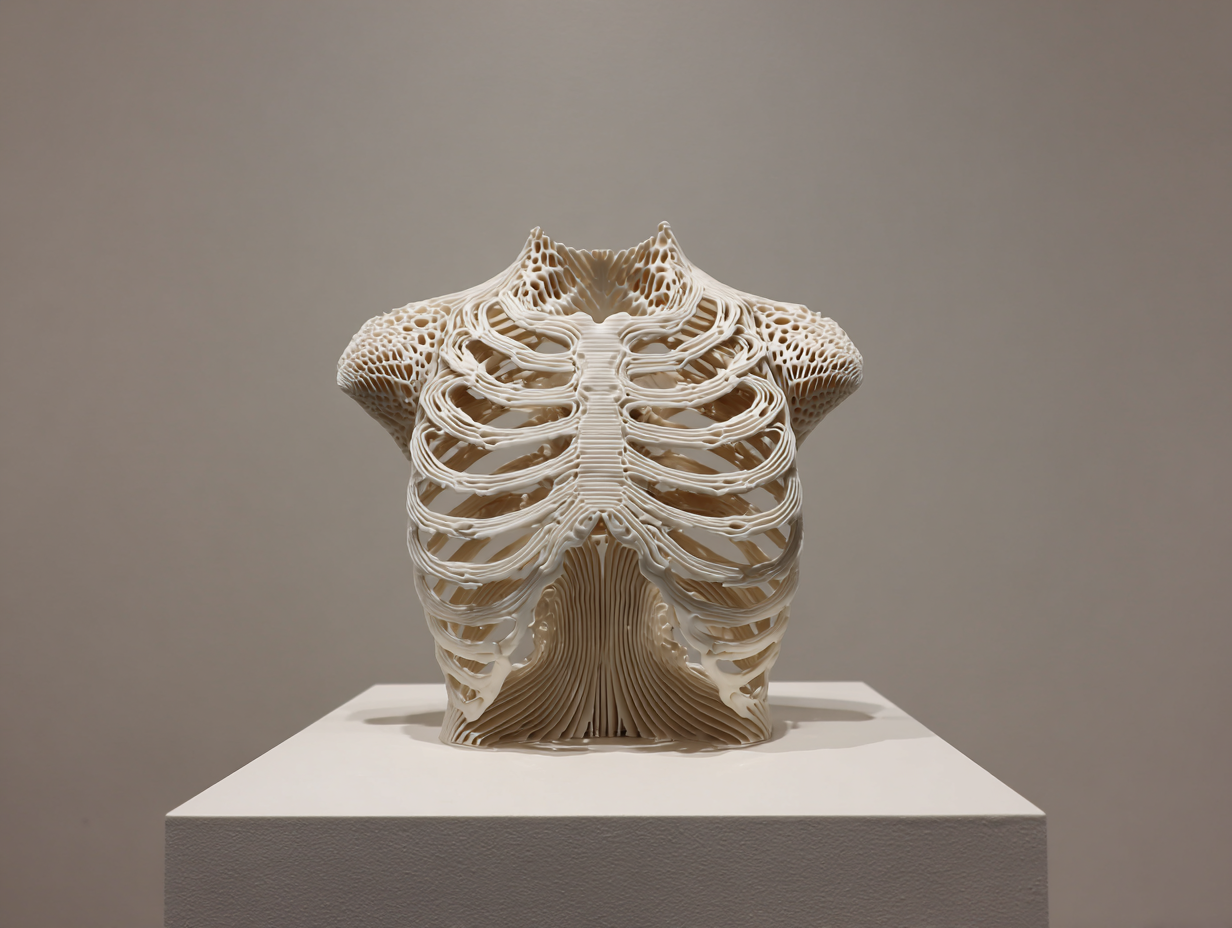

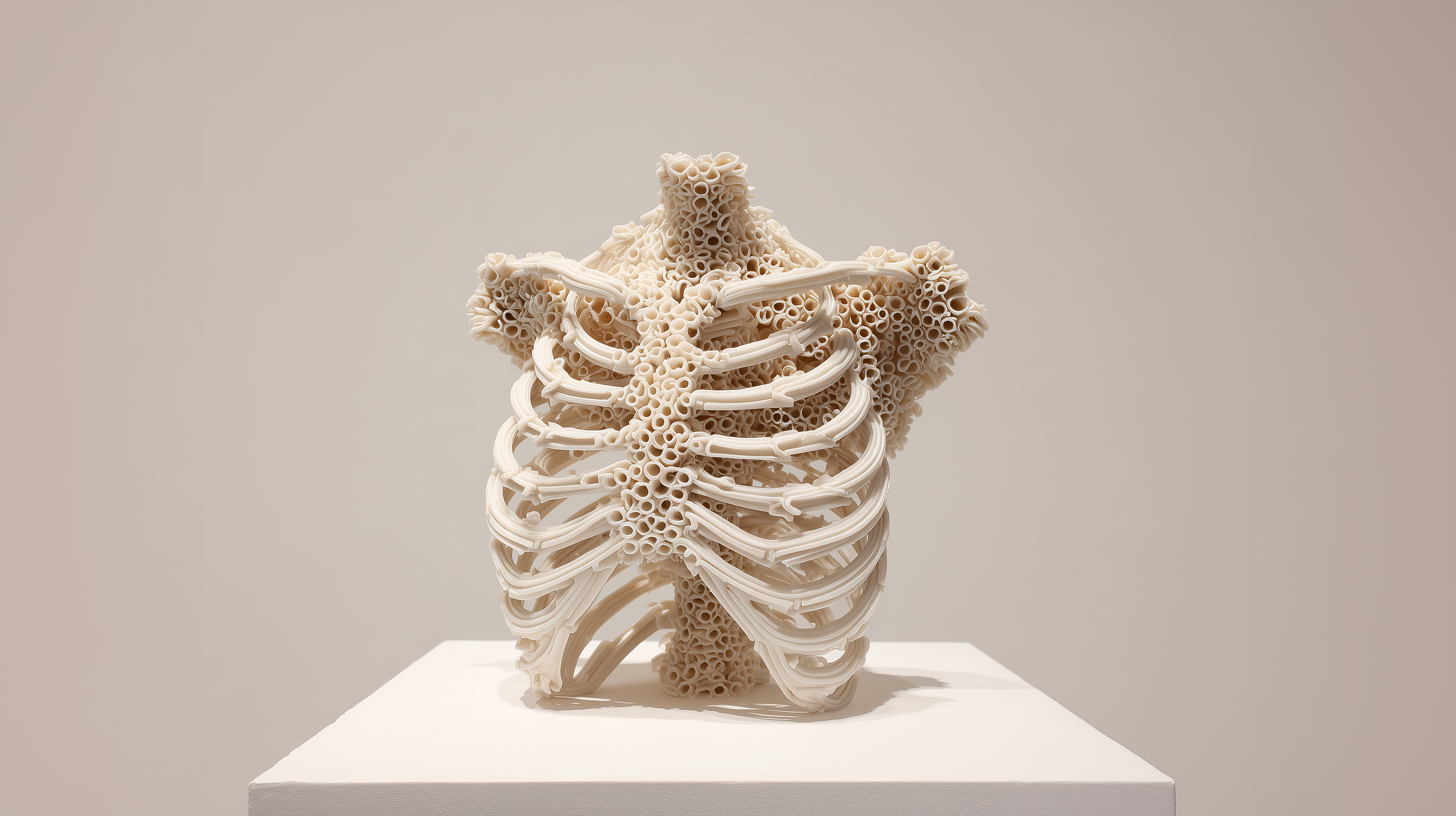

Ribcage Prototype I (2024). From the series Anatomy of Defense (2024–) — an experimental skeletal model exploring how biological structure adapts to AI-driven defense morphologies.

Anatomy of Defense

DAVID KIM (DE)

Anatomy of Defense (2024–) presents a 3D-printed ribcage mutated into a protective shield for vital organs. The work imagines the body not as a site of healing but as a site of militarization, where biology itself is redesigned as armor. By blending anatomical form with weaponized function, Kim highlights the growing entanglement of AI, biotechnology, and the defense industry.

Part of a larger project exploring “bio-weaponized design,” the piece draws on real-world research in synthetic biology, tissue engineering, and prosthetic augmentation — technologies already adapted by the military for combat and defense. Anatomy of Defense pushes this logic to its limit, revealing a body that no longer exists for care, only for survival in permanent conflict.

Ribcage Prototype II (2025). From the series Anatomy of Defense (2024–). A speculative iteration of human anatomy reimagined as weaponized architecture.

Ribcage Arsenal (2024). From the series Anatomy of Defense (2024–). A 3D-printed ribcage reconstructed as protective armor for vital organs.

Ribcage Bastion (2024). From the series Anatomy of Defense (2024–). A fortified ribcage form that merges organic symmetry with military design logic.

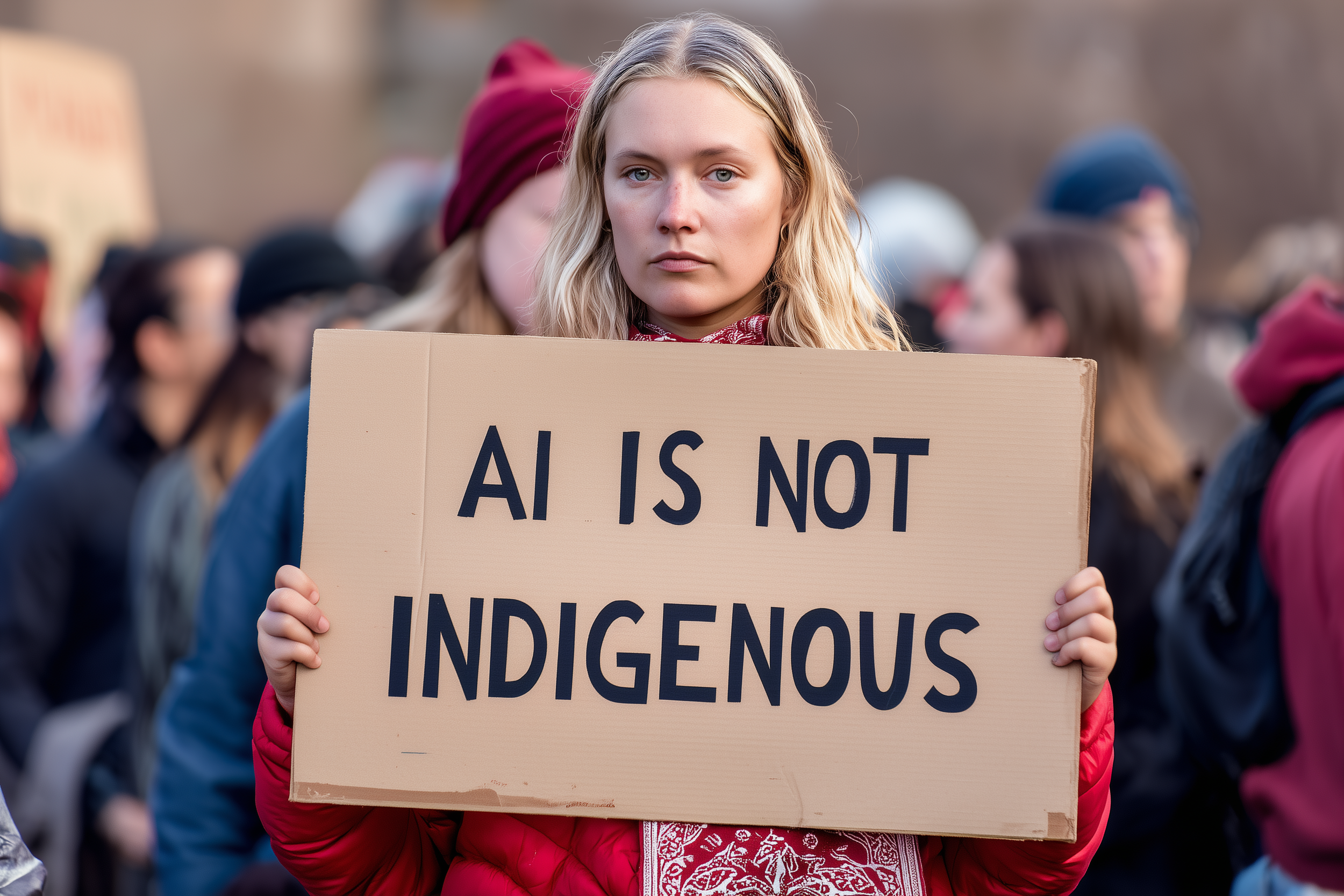

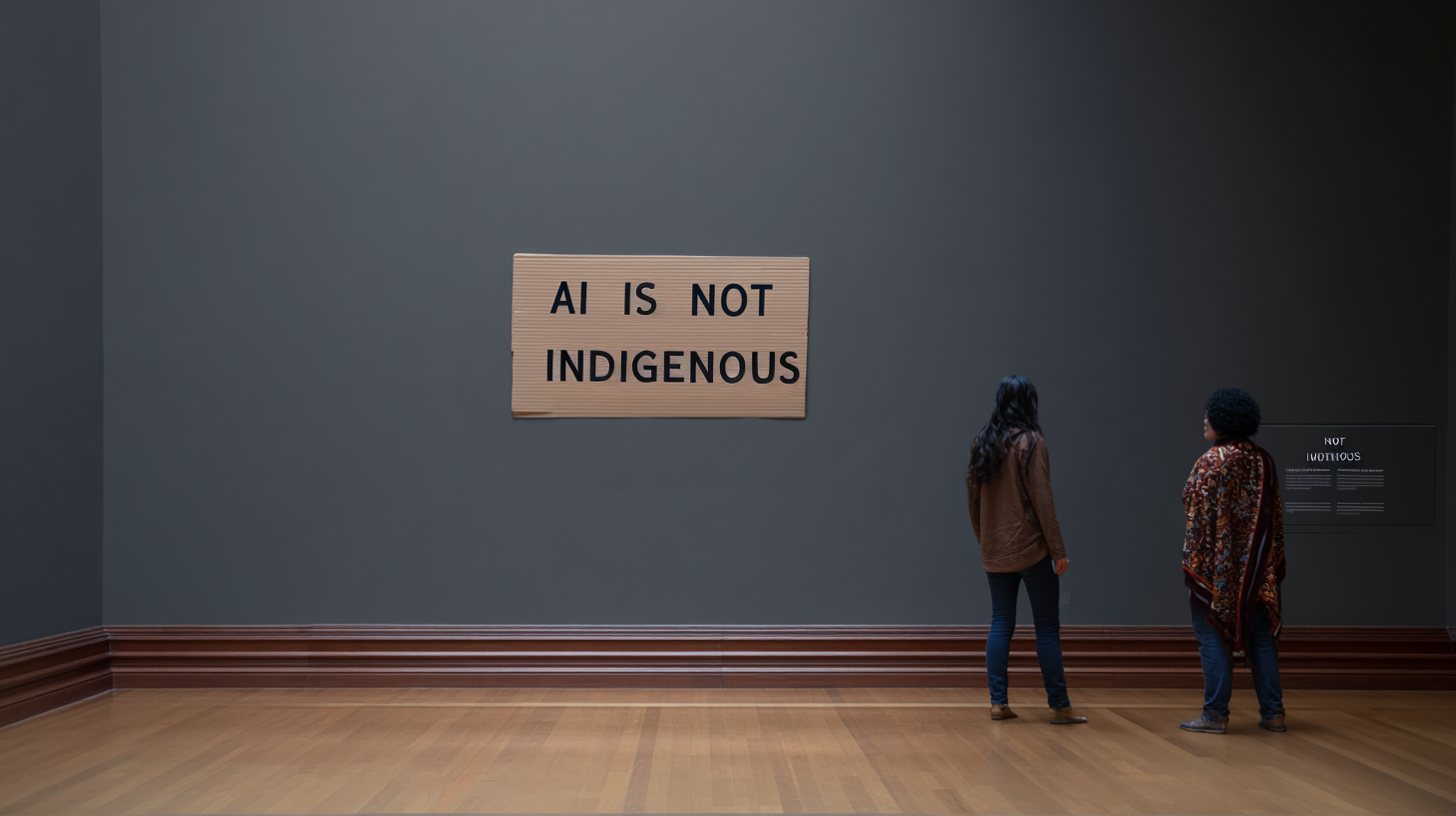

AI Is Not Indigenous (2022). From the series AI Is Not Indigenous (2022–).

AI Is Not Indigenous

JOHANNA ANDERSSON & LARS BERGMAN (SE/SÁPMI)

AI Is Not Indigenous (2022–) transforms protest into a sustained artistic practice, confronting algorithmic appropriation and cultural erasure. The artists’ slogans, bold declarations carried into the streets, insist that belonging, ancestry, and resistance cannot be automated. By turning protest signs into artworks, Andersson & Bergman expose how datasets scrape Indigenous forms while stripping away meaning. The work refuses to let culture be reduced to patterns, asserting protest as both resistance and creation.

Their protest is not only a reaction to AI but part of a much longer history of colonial extraction, where Indigenous knowledge has repeatedly been commodified without consent. In the context of today’s dataset economies, the work insists that political resistance itself must be recognized as an art form.

AI Is Not Indigenous (2022). Gallery view.

Belonging Cannot Be Programmed (2024).

Sámi Culture Is Not Open Source (2025).

Noise Poisoning #237 (2024). From the series Noise Poisoning (2023–).

Noise Poisoning

KAELEN VARGA (CZ)

Noise Poisoning (2024–) is a long-term project in which imperceptible adversarial noise is injected into training images used by commercial AI systems. The corrupted datasets disrupt machine learning models, producing cascading errors that destabilize supposedly “neutral” algorithmic perception.

In this work, Varga reframes digital sabotage as both an ethical and aesthetic practice: a strategy of reclaiming agency within systems that otherwise absorb and reproduce everything they see. Each poisoned image is a quiet insurgency—a minimal gesture that spreads unpredictably through vast networks.

Rather than portraying resistance from the outside, Noise Poisoning operates within the system’s blind spots. What begins as a technical interference unfolds into a meditation on fragility, agency, and the beauty of machine failure.

Noise Poisoning #23 (2024).

Noise Poisoning #421 (2025).

Noise Poisoning (2025). Installation view.

The Algorithm Will See You Now (2025). Installation view.

The Algorithm Will See You Now

SOFIA ANWARIAN (IR/FR)

The Algorithm Will See You Now (2025) transforms the gallery into a simulated clinic where visitors become patients. The AI system monitors physiological and behavioral data, analyzing each participant and refusing to release them until it detects an anomaly. The experience is both immersive and claustrophobic, turning the familiar language of medical empathy into one of surveillance.

The work critiques the ideology of preemptive diagnostics and the automation of trust in predictive health systems. Anwarian stages an environment where care becomes indistinguishable from control — where the gesture of healing is replaced by the logic of prediction. By forcing audiences into the role of captive patients, she reveals the paradox of our data-driven present: the more we quantify ourselves, the less we are allowed to simply be.

The Algorithm Will See You Now (2025). Installation view. Visitors waiting for the system to generate their diagnosis.

The Algorithm Will See You Now (2025). Installation view. A visitor waiting to enter the AI-managed clinic.

The Algorithm Will See You Now (2025). Installation view. Participant wearing custom biometric wearables.

AI Loves Me (2023–).

AI Loves Me

Aria Singh (IN/UK) & Max Thompson (UK)

AI Loves Me (2023–) is part of the collaborative series Limbless and Limitless, in which Singh and Thompson feed datasets of bodily imagery (hands, gestures, movement) into generative AI systems that routinely fail to render them correctly. The resulting images show twisted limbs, fused fingers, and displaced anatomy. Instead of rejecting these results, the artists treat them as portraits of resilience and difference, claiming the glitch as an ally. The project exposes AI’s normative bias toward symmetry and “ideal” form, using its failures to create new expressions of identity, care, and intimacy. Each work becomes a dialogue between body and code, deformity and design: a celebration of imperfection as intelligence.

Touch (2024). From the series Limbless and Limitless.

Four is More (2023). From the series Limbless and Limitless.

Unconditionally (2023). From the series Limbless and Limitless.

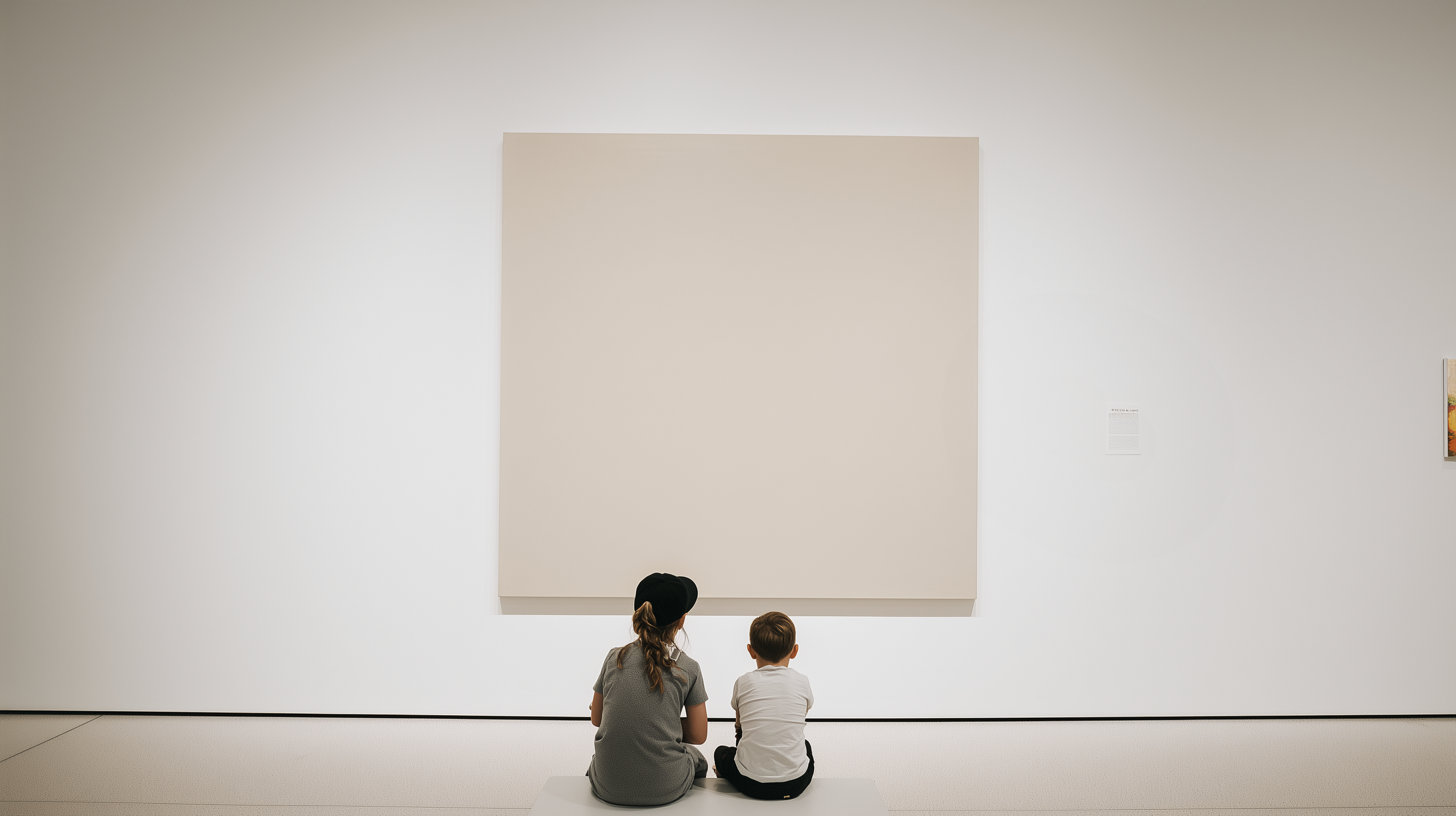

Terminal Beige: The Average Color of Mass Extinction (2024). Gallery view.

Terminal Beige: The Average Color of Mass Extinction

Elias Novák (CZ)

Terminal Beige: The Average Color of Mass Extinction (2024) uses AI to calculate the statistical “average color” of planetary collapse, distilling vast climate datasets and probabilistic catastrophe forecasts into a single Pantone swatch. The resulting shade—a muted, indifferent beige—captures the flattening of tragedy into information. It is a visual silence, an elegy that looks like nothing at all.

Part of Novák’s Extinction Pantones series, the work exposes how predictive systems turn the unimaginable into something printable and palatable. By compressing climate futures into color, Novák reveals the psychological fatigue of data visualization and the paradox of trying to represent an extinction we cannot yet see. His beige is not neutrality—it is surrender.

Terminal Beige: The Average Color of Mass Extinction (2024). Gallery view showing the AI-calculated “average color” of total planetary collapse, a statistical distillation of extinction.

Methane Surge, 2061 (2024). The algorithm computes the chromatic signature of a future methane release, turning unseen greenhouse escalation into hue.

AMOC Shutdown, 2047 (“The Year the Oceans Stopped Breathing”) (2025). Exhibition view of the color representing the projected collapse of the Atlantic Meridional Overturning Circulation.